Sketch to Color Image Generation Using Conditional GANs

Read my article on Medium

Sketch to Color Image Generation is an image-to-image translation model that uses Conditional Generative Adversarial Networks (cGANs), as described in the original paper by Phillip Isola, Jun-Yan Zhu, Tinghui Zhou, Alexei A. Efros 2016, Image-to-Image Translation with Conditional Adversarial Networks.
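For reference, the objective in the Isola et al. paper combines a conditional adversarial loss with an L1 reconstruction term. Here $x$ is the input sketch, $y$ the target color image and $z$ the noise (supplied through dropout in the paper). The paper uses $\lambda = 100$; whether this repository uses the same weighting is not stated here, so treat that value as an assumption.

```latex
\mathcal{L}_{cGAN}(G, D) = \mathbb{E}_{x,y}\left[\log D(x, y)\right] + \mathbb{E}_{x,z}\left[\log\left(1 - D(x, G(x, z))\right)\right]

\mathcal{L}_{L1}(G) = \mathbb{E}_{x,y,z}\left[\lVert y - G(x, z) \rVert_{1}\right]

G^{*} = \arg\min_{G}\max_{D}\; \mathcal{L}_{cGAN}(G, D) + \lambda\,\mathcal{L}_{L1}(G)
```

The L1 term keeps the generated colors close to the ground truth, while the adversarial term pushes the output toward realistic-looking textures.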

The model was trained on the Anime Sketch-Colorization Pair dataset available on Kaggle, which contains 14.2k pairs of sketch and color anime images. Training ran for 150 epochs and took approximately 23 hours on a single GeForce GTX 1060 6GB graphics card with 16 GB of RAM.
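Each training example pairs a sketch with its colored version. The following is a minimal NumPy sketch of splitting one pair image and scaling it to the [-1, 1] range used by a tanh-output generator (as in the TensorFlow pix2pix tutorial). It assumes each dataset file stores the color image on the left half and the sketch on the right half; `split_pair` and `normalize` are hypothetical helpers, not functions from this repository.

```python
from typing import Tuple

import numpy as np


def split_pair(pair: np.ndarray) -> Tuple[np.ndarray, np.ndarray]:
    """Split a side-by-side pair image (H x W x 3) into (sketch, color).

    ASSUMPTION: the color image is on the left half and the sketch on the
    right half; swap the two slices if your copy of the dataset differs.
    """
    half = pair.shape[1] // 2
    color = pair[:, :half, :]
    sketch = pair[:, half:, :]
    return sketch, color


def normalize(image: np.ndarray) -> np.ndarray:
    """Map uint8 pixels in [0, 255] to float32 values in [-1, 1]."""
    return image.astype(np.float32) / 127.5 - 1.0


if __name__ == "__main__":
    pair = np.zeros((512, 1024, 3), dtype=np.uint8)  # dummy pair image
    sketch, color = split_pair(pair)
    print(sketch.shape, color.shape, normalize(sketch).min())
```

Scaling both halves the same way keeps the input and target in the range the generator's tanh output can produce.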


Requirements

To download and use this code, the minimum requirements are:

- Python 3.6 and above

- pip 19.0 or later

- Windows 7 or later (64-bit)

- TensorFlow 2.2 and above

- GPU support requires a CUDA®-enabled card
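A quick environment check can save time before running anything. The sketch below uses a hypothetical helper, `meets_minimum` (not part of this repository), to compare version strings against the minimums listed above. If TensorFlow is importable, it also reports whether a CUDA-enabled GPU is visible.

```python
import sys


def meets_minimum(version, minimum):
    """Return True if a dotted version string is >= the `minimum` tuple.

    Only leading digits of each component are compared, so suffixes such
    as "2.4.0-rc1" or "2.10.1+cuda" are tolerated.
    """
    parts = []
    for piece in version.split(".")[:len(minimum)]:
        digits = ""
        for ch in piece:
            if not ch.isdigit():
                break
            digits += ch
        parts.append(int(digits) if digits else 0)
    while len(parts) < len(minimum):  # e.g. "3" is treated as "3.0"
        parts.append(0)
    return tuple(parts) >= tuple(minimum)


if __name__ == "__main__":
    py = "{}.{}.{}".format(*sys.version_info[:3])
    print("Python", py, "OK" if meets_minimum(py, (3, 6)) else "too old")
    try:
        import tensorflow as tf
    except ImportError:
        print("TensorFlow is not installed")
    else:
        ok = meets_minimum(tf.__version__, (2, 2))
        print("TensorFlow", tf.__version__, "OK" if ok else "too old")
        if ok:
            # An empty list means training will fall back to the CPU.
            print("GPUs:", tf.config.list_physical_devices("GPU"))
```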

Usage

Once the requirements are met, you can download this project and use it on your machine in a few simple steps.


STEP 1

Download this repository as a zip file onto your machine and extract all the files from it.


STEP 2

Run the runModel.py file with Python to train the model and see the results.

NOTE:

1 - You will need to change the dataset path on line #12 of runModel.py to match your machine's environment. You can download the dataset from Kaggle at https://www.kaggle.com/ktaebum/anime-sketch-colorization-pair.

2 - GANs are resource-intensive models, so if you run into out-of-memory (OOM) or similar errors, try adjusting the variables on lines #15 to #19 to suit your hardware.
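To see why those settings matter, here is a rough estimate of the memory taken by one float32 input batch. The names `BATCH_SIZE`, `IMG_HEIGHT` and `IMG_WIDTH` and their values are hypothetical, not the script's actual variables. The estimate counts only input tensors, so real usage (dominated by generator and discriminator activations and gradients) is far higher. It does show the scaling: memory grows linearly with batch size and quadratically with image side length.

```python
# Hypothetical settings; the real names and values on lines #15 to #19
# of runModel.py may differ.
BATCH_SIZE = 4
IMG_HEIGHT = 256
IMG_WIDTH = 256


def input_batch_bytes(batch_size, height, width, channels=3, bytes_per_value=4):
    """Bytes for one float32 image batch (inputs only, no activations)."""
    return batch_size * height * width * channels * bytes_per_value


def scaling_report(batch_size, height, width):
    """Compare the current setting with halving the batch or the resolution."""
    return {
        "current": input_batch_bytes(batch_size, height, width),
        "half_batch": input_batch_bytes(max(1, batch_size // 2), height, width),
        "half_resolution": input_batch_bytes(batch_size, height // 2, width // 2),
    }


if __name__ == "__main__":
    for name, size in scaling_report(BATCH_SIZE, IMG_HEIGHT, IMG_WIDTH).items():
        print(f"{name}: {size / 2**20:.2f} MiB")
```

Halving the image side length cuts memory roughly four times, while halving the batch size cuts it roughly two times.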


STEP 3

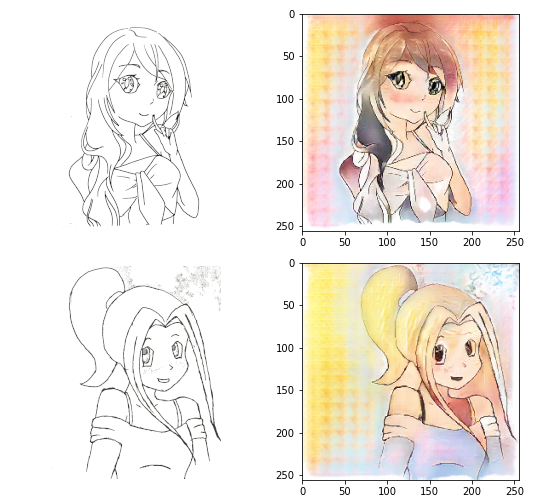

After the execution is complete, the generator model will be saved in your root direcrtory of the project asAnimeColorizationModelv1.h5file. You can use this model to directly generate colored images from any Black and White images in just a few seconds. Please note that the images used for training are digitally drawn sketches. So, use images with perfect white background to see near perfect results.You can see some of the results from hand drawn sketches shown below:
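A minimal sketch of colorizing a single sketch with the saved AnimeColorizationModelv1.h5 generator follows. It relies on assumptions this README does not confirm: the generator takes 256x256 RGB inputs scaled to [-1, 1] and returns outputs in the same range, as in the TensorFlow pix2pix tutorial. All helper names and the threshold of 235 in `whiten_background` are hypothetical. That helper pushes near-white pixels to pure white, following the advice above about white backgrounds.

```python
import numpy as np


def whiten_background(sketch, threshold=235):
    """Snap near-white pixels to pure white (255); threshold is a guess."""
    cleaned = sketch.copy()
    cleaned[cleaned >= threshold] = 255
    return cleaned


def to_model_input(sketch):
    """uint8 H x W x 3 image -> float32 batch of one in [-1, 1]."""
    return (sketch.astype(np.float32) / 127.5 - 1.0)[np.newaxis, ...]


def to_uint8_image(output):
    """Generator output in [-1, 1] (batch of one) -> uint8 H x W x 3 image."""
    image = (output[0] + 1.0) * 127.5
    return np.clip(image, 0, 255).astype(np.uint8)


def colorize(path, model_path="AnimeColorizationModelv1.h5", size=256):
    """Load the saved generator and colorize one sketch file (assumed 256x256 model input)."""
    import tensorflow as tf  # imported lazily so the helpers above work without TensorFlow

    generator = tf.keras.models.load_model(model_path, compile=False)
    raw = tf.io.read_file(path)
    sketch = tf.image.decode_image(raw, channels=3, expand_animations=False)
    sketch = tf.image.resize(sketch, [size, size]).numpy()
    sketch = np.clip(sketch, 0, 255).astype(np.uint8)
    batch = to_model_input(whiten_background(sketch))
    return to_uint8_image(generator(batch, training=False).numpy())
```

For example, `colored = colorize("my_sketch.png")` returns a uint8 array that can be saved with any image library. The TensorFlow pix2pix tutorial deliberately calls its generator with `training=True` at inference time so that batch statistics are used, so both settings may be worth trying.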

Future Works

I’ve been working on GANs for a long time and plan to continue exploring the field for further applications and research. Below are some directions in which this project could grow, or for which it could serve as a base.

- Trying different datasets to learn how to preprocess different types of images and building models specific to those input image types.

- This project performs image-to-image translation on individual images. The model could be extended to process black-and-white sketch video frames to generate colored videos.

- Converting the model from HDF5 to JSON and building interesting web apps using TensorFlow.js.

Credits

Some of the research papers that helped me understand the in-depth working of the models are:

- Ian J. Goodfellow et al. 2014, Generative Adversarial Nets

- Mehdi Mirza, Simon Osindero 2014, Conditional Generative Adversarial Nets

- Phillip Isola, Jun-Yan Zhu, Tinghui Zhou, Alexei A. Efros 2016, Image-to-Image Translation with Conditional Adversarial Networks

The code is based on the TensorFlow tutorials available on the TensorFlow website. The tutorials are intuitive and easy to follow.


The dataset can be easily downloaded from Kaggle website at https://www.kaggle.com/ktaebum/anime-sketch-colorization-pair.

Send Me Queries or Feedback

This project is open source and intended for further research, so contact me if you…

- …have some valuable inputs to share

- …want to work on it

- …find any bug

- …want to share feedback on the work

- …etc.

Send me an email at tejasmorkar@gmail.com or create a new Issue on this repository. You can also contact me through my LinkedIn profile.

License

This project is freely available for non-commercial and commercial use and may be redistributed under the conditions of its license. Please see the license for further details.